The case against predicting tokens to build AGI


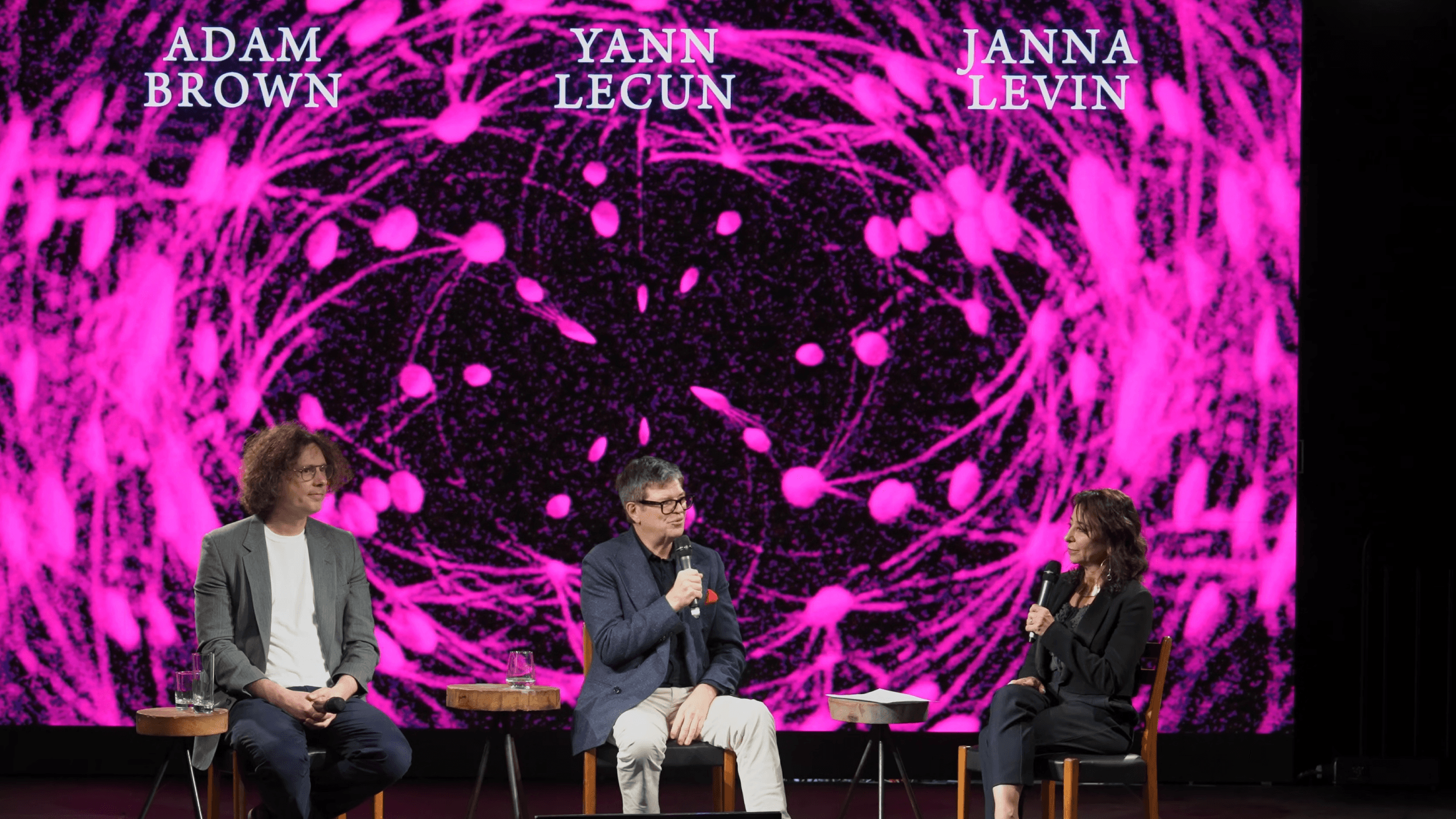

- In a recent debate with DeepMind researcher Adam Brown, Yann LeCun, Meta's Chief AI Scientist, argued that Large Language Models (LLMs) cannot achieve human-like intelligence, stating that their reliance on token prediction makes them a dead end in the quest for Artificial General Intelligence (AGI). The exchange highlighted fundamental concerns about the limitations of current AI models.

- LeCun's departure from Meta, which follows a restructuring of the company's AI initiatives, marks a significant shift in leadership and vision within the organization. His new venture aims to develop advanced AI systems that understand the physical world rather than only generate text.

- This transition reflects a broader debate within the AI community about the path to AGI, with contrasting views on the potential of generative models versus world models. While some experts predict advances toward minimal AGI by 2028, LeCun's pivot suggests a growing recognition that AI systems need to reason and plan, not only predict.

— via World Pulse Now AI Editorial System