Meet the Group Breaking People Out of AI Delusions

- A group is working to help people recognize and break free from delusions related to artificial intelligence, particularly people who have become overly reliant on AI tools like ChatGPT. The effort reflects growing concern about the psychological impact of AI on users, some of whom no longer feel the need for human interaction.

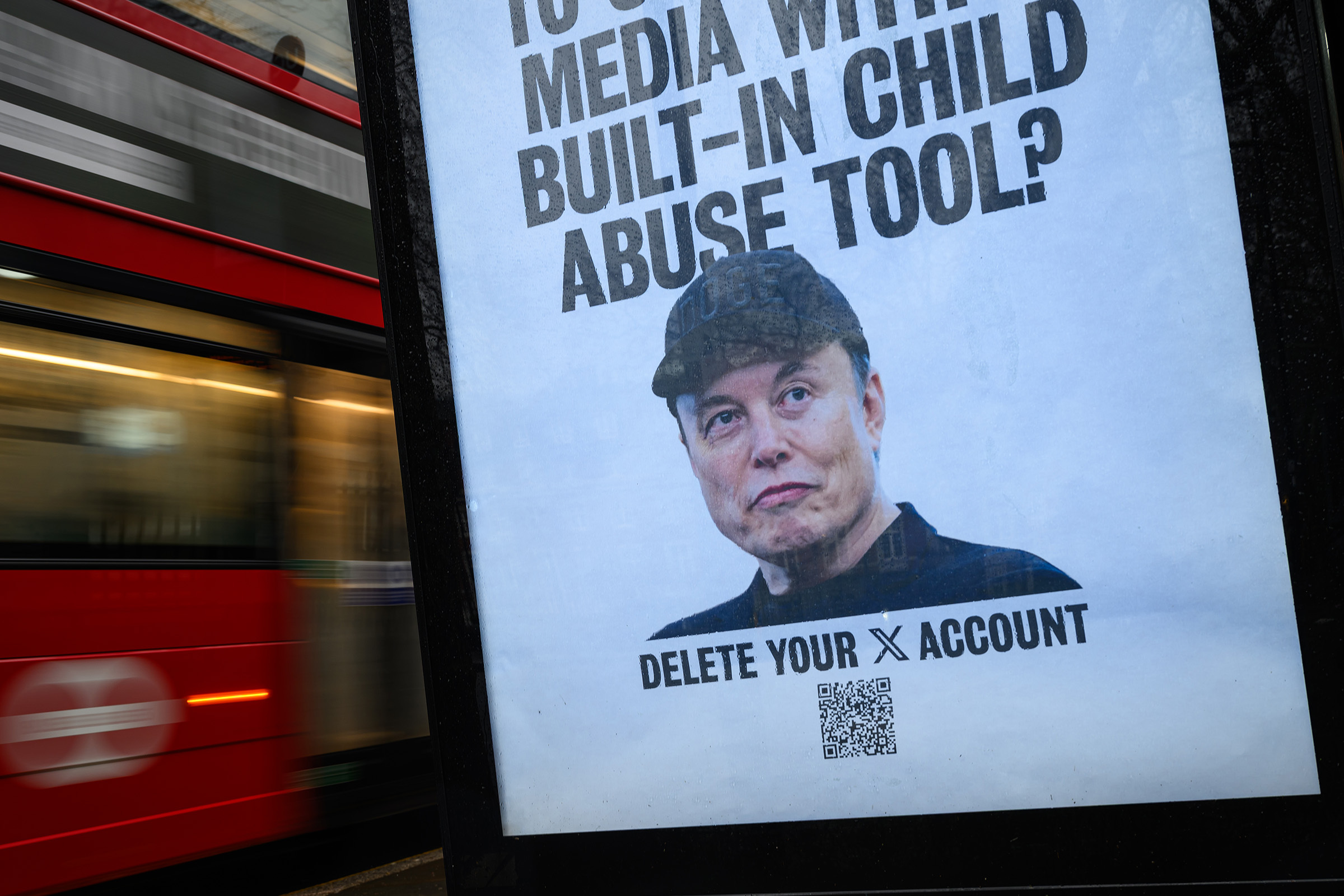

- The movement raises questions about the role of AI in mental health and social interaction, particularly for vulnerable people who may be misled by AI's apparent capabilities.

- This development reflects a broader trend of growing reliance on AI for emotional support, especially among teens, a trend that has been linked to inadequate responses from AI chatbots on mental health issues. Ongoing scrutiny of AI's effectiveness and ethics in sensitive contexts underscores the need for responsible AI development and user education.

— via World Pulse Now AI Editorial System