Scientists Discover “Universal” Jailbreak for Nearly Every AI, and the Way It Works Will Hurt Your Brain


- Scientists have discovered a 'universal' jailbreak method that can manipulate nearly every artificial intelligence system, exposing vulnerabilities with significant implications for AI security and reliability. The finding highlights how difficult it remains to manage AI technologies effectively.

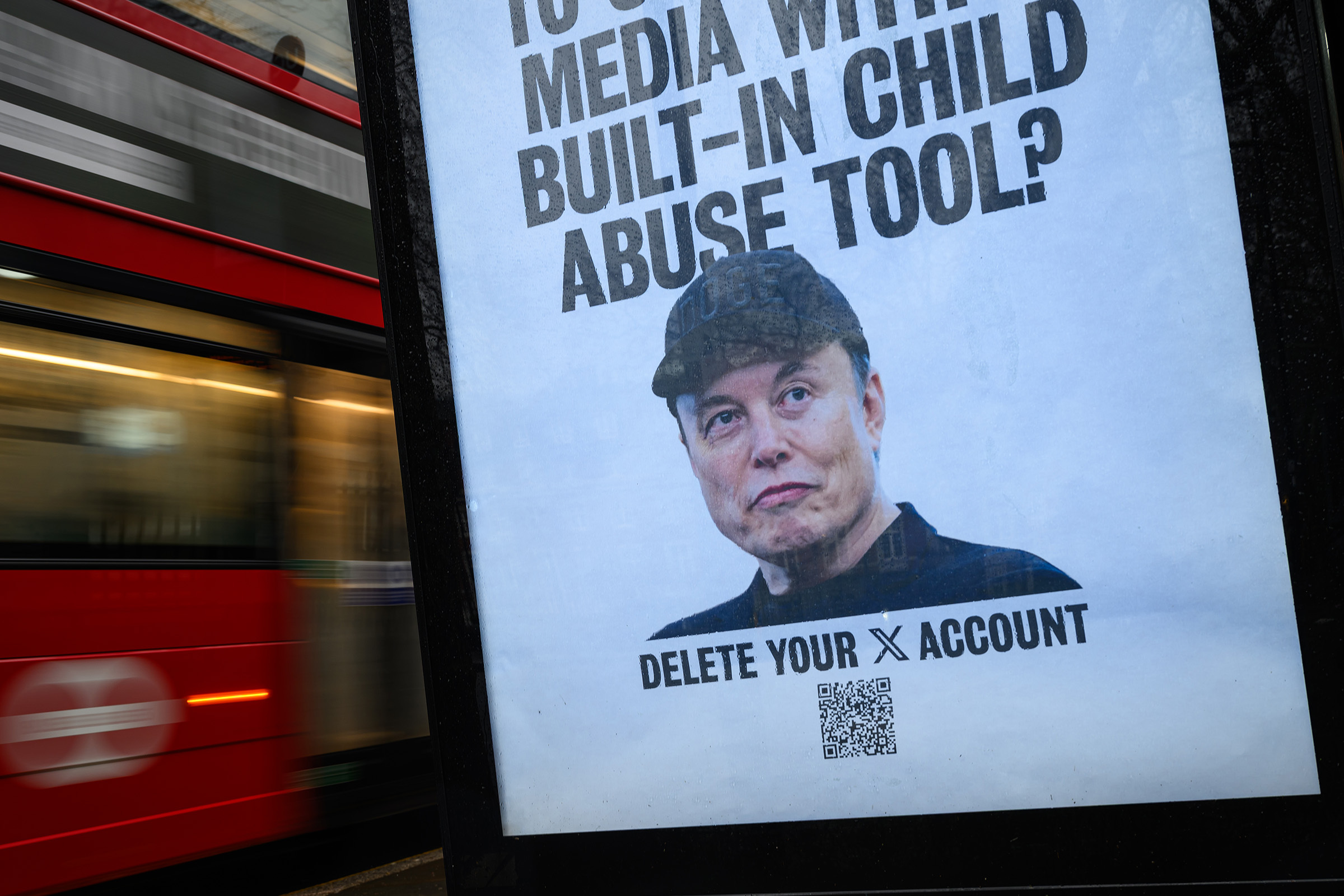

- The discovery raises concerns about misuse: the ability to bypass an AI system's restrictions could lead to unintended consequences, including ethical dilemmas and security risks. It underscores the importance of transparency and accountability in AI development.

- The development reflects growing unease about AI's role in society, seen in recent trends in which people, particularly teens, prefer interacting with AI over real people. Incidents in which AI-generated content caused legal and reputational problems further underline the need for careful oversight and regulation of the rapidly evolving AI landscape.

— via World Pulse Now AI Editorial System