Let's Poison Your LLM Application: A Security Wake-Up Call


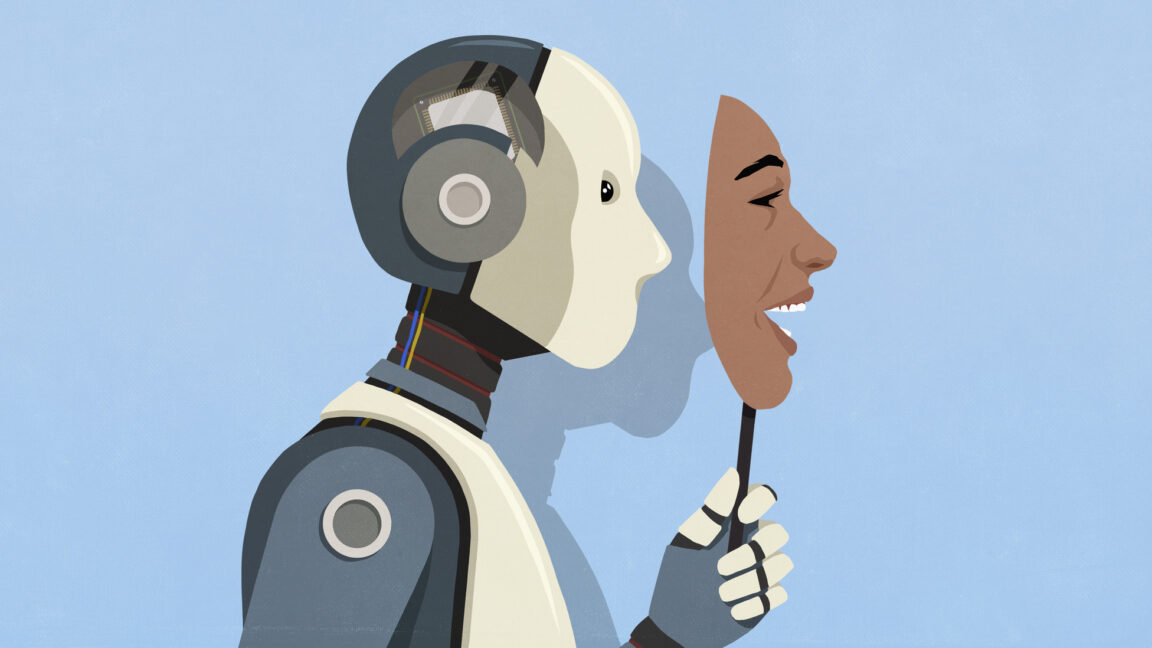

As AI applications proliferate, integrating Large Language Models (LLMs) raises serious cybersecurity concerns that many organizations are ignoring. Prompt injection attacks, in which crafted input overrides the instructions an application gives its model, are a significant threat and can compromise sensitive operations. This article is a reminder for businesses to prioritize security when deploying AI technologies and to protect their systems from emerging vulnerabilities.

— Curated by the World Pulse Now AI Editorial System