AI has read everything on the internet; now it's watching how we live to train robots

Neutral · Artificial Intelligence

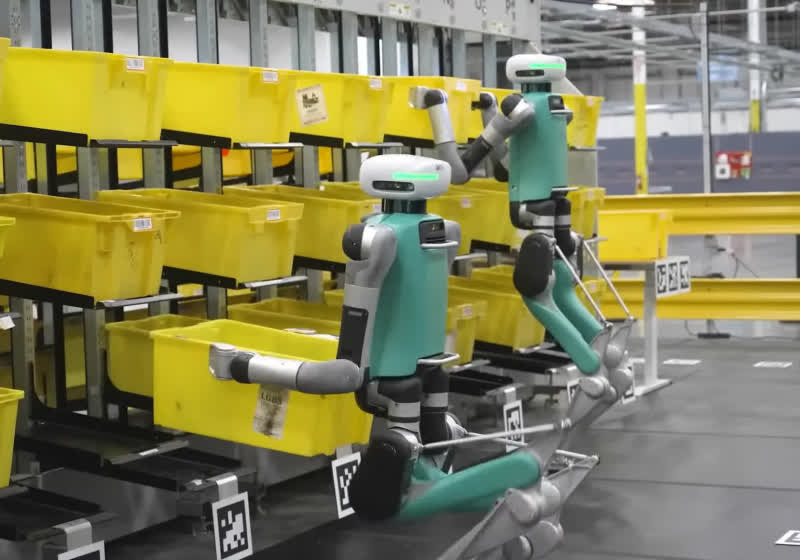

In Karur, India, Naveen Kumar helps train robots not by writing code but by demonstrating precise hand movements. His work shows how AI development is moving beyond processing information from the internet toward observing and learning from human actions. The shift could make robots better at understanding and mimicking human behavior, which could lead to advances across a range of industries.

— via World Pulse Now AI Editorial System