INT v.s. FP: A Comprehensive Study of Fine-Grained Low-bit Quantization Formats


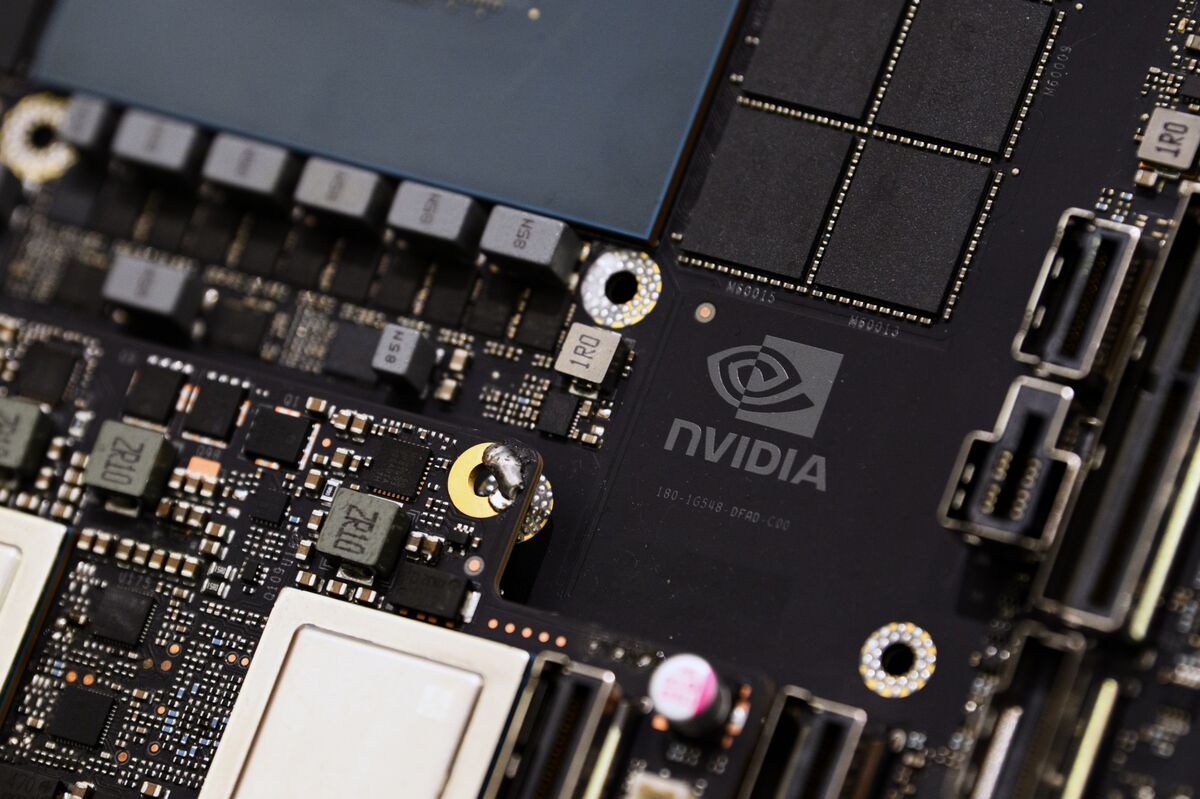

A new study compares low-bit floating-point (FP) and integer (INT) quantization formats, with a focus on fine-grained (block-wise) quantization. AI hardware such as Nvidia's Blackwell architecture increasingly adopts low-precision FP formats to cope with activation outliers in large language models (LLMs), yet a systematic comparison of FP and INT formats across quantization granularities has been missing. By filling that gap, the study aims to give clearer guidance for algorithm and hardware co-design, which could lead to more efficient AI hardware and models.
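To make the INT-versus-FP and granularity trade-off concrete, here is a minimal sketch (not the study's methodology) that quantizes a vector containing one activation outlier to 4 bits in two ways: a symmetric INT4 grid in [-7, 7] and the FP4 E2M1 value set {0, 0.5, 1, 1.5, 2, 3, 4, 6}. Each block gets one real-valued absmax scale. The function names, the outlier value, and the block sizes are hypothetical choices for illustration. Real hardware formats also constrain how the per-block scale is encoded, and this sketch does not model that.

```python
import math
import random

# Assumed FP4 E2M1 magnitudes (1 sign, 2 exponent, 1 mantissa bit).
FP4_E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
INT4_MAX = 7  # assumed symmetric signed INT4 range [-7, 7]


def quantize_int4(x: float, scale: float) -> float:
    """Round x/scale to the nearest integer (Python round: ties to even), clamp, dequantize."""
    q = max(-INT4_MAX, min(INT4_MAX, round(x / scale)))
    return q * scale


def quantize_fp4(x: float, scale: float) -> float:
    """Snap |x|/scale to the nearest E2M1 magnitude (ties go to the smaller one), keep the sign."""
    mag = abs(x) / scale
    nearest = min(FP4_E2M1_GRID, key=lambda g: abs(g - mag))
    return math.copysign(nearest * scale, x)


def blockwise_mse(values, block_size, fmt):
    """Quantize `values` in blocks with one absmax scale per block; return mean squared error."""
    if fmt == "int4":
        max_code, quant = INT4_MAX, quantize_int4
    elif fmt == "fp4":
        max_code, quant = FP4_E2M1_GRID[-1], quantize_fp4
    else:
        raise ValueError(f"unknown format: {fmt}")
    err = 0.0
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        absmax = max(abs(v) for v in block)
        # Map the block's largest magnitude onto the format's largest code.
        scale = absmax / max_code if absmax > 0 else 1.0
        err += sum((v - quant(v, scale)) ** 2 for v in block)
    return err / len(values)


# Hypothetical activations: Gaussian values plus one large outlier.
random.seed(0)
acts = [random.gauss(0.0, 1.0) for _ in range(1024)]
acts[100] = 40.0

for block_size in (1024, 32):
    print(f"block={block_size:4d}  "
          f"INT4 MSE={blockwise_mse(acts, block_size, 'int4'):.4f}  "
          f"FP4 MSE={blockwise_mse(acts, block_size, 'fp4'):.4f}")
```

With one scale for the whole tensor, the outlier inflates the scale, so most ordinary values collapse to zero. Shrinking the block confines that damage to the single block containing the outlier, which is the motivation for fine-grained formats. The sketch makes no claim about which format the study found better at a given granularity.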

— Curated by the World Pulse Now AI Editorial System