Hybrid Learning and Optimization-Based Dynamic Scheduling for DL Workloads on Heterogeneous GPU Clusters

Positive · Artificial Intelligence

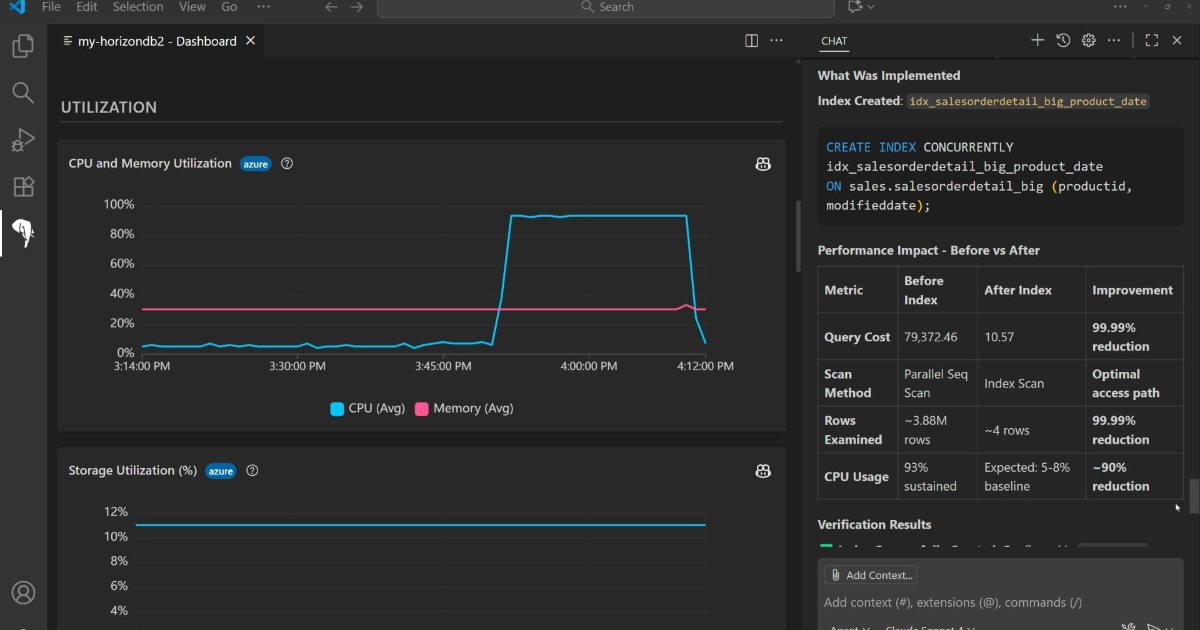

- RLTune, a new reinforcement learning-based scheduling framework, optimizes deep learning workloads on heterogeneous GPU clusters, where the growing complexity and diversity of GPU resources make scheduling harder. It improves job prioritization and resource allocation, raising GPU utilization while reducing job completion times and queueing delays.

- This advancement matters for companies such as Microsoft, which rely on efficient GPU scheduling to manage large-scale deep learning tasks. By improving GPU utilization by up to 20% and reducing queueing delays by up to 81%, RLTune could position Microsoft to better handle the growing demands of AI workloads in cloud environments.

- The development of RLTune reflects a broader trend in the tech industry towards optimizing resource management in AI applications. As reliance on cloud-based solutions increases, the need for efficient scheduling mechanisms becomes paramount, especially as organizations seek to leverage large language models and other AI technologies that require substantial computational resources.

— via World Pulse Now AI Editorial System