Robust Reinforcement Learning from Human Feedback for Large Language Models Fine-Tuning


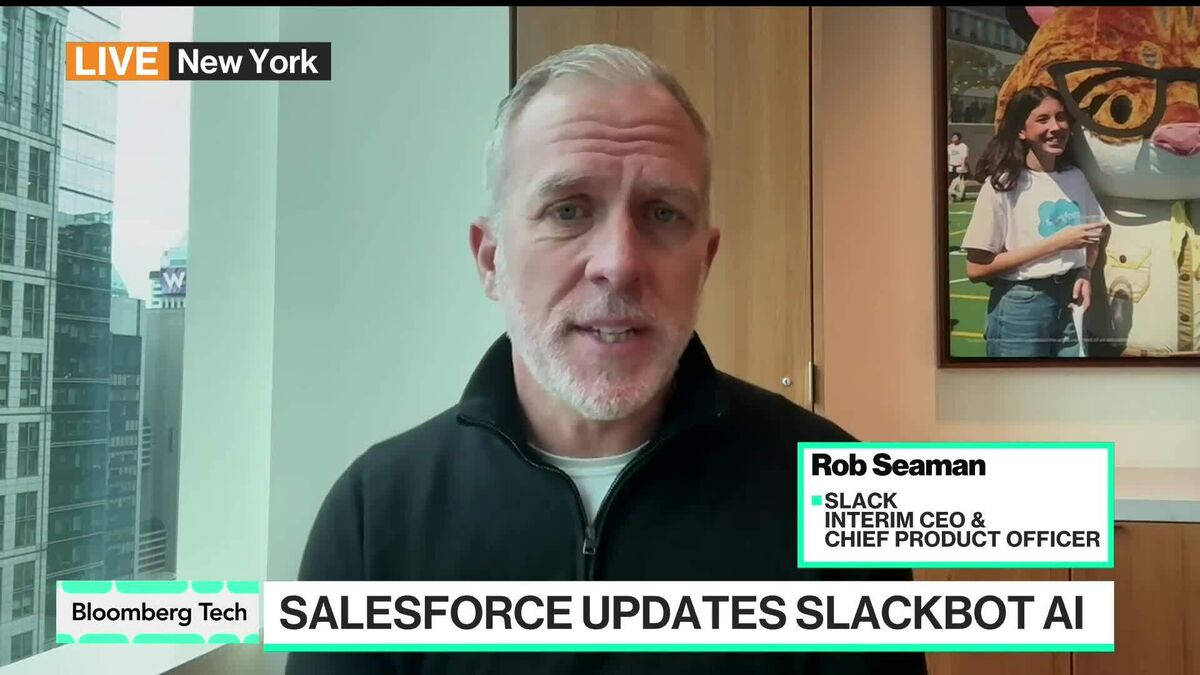

- A new algorithm for reinforcement learning from human feedback (RLHF) has been proposed to improve the alignment of large language models (LLMs) with human preferences, addressing limitations of traditional methods that rely on the Bradley-Terry model.

- This development is significant because it offers a more reliable approach to fine-tuning LLMs.

- The advancement highlights ongoing challenges in ensuring LLMs accurately reflect human preferences, amidst discussions on the truthfulness and calibration of LLM outputs, as well as the need for robust reward models that can adapt to complex human judgments.
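
For context, the Bradley-Terry preference model that conventional RLHF reward modeling commonly relies on can be sketched in a few lines. This is only an illustration of that baseline assumption, not the proposed algorithm, which the summary does not describe. The function names and the scalar reward inputs are hypothetical.

```python
import math


def bt_preference_prob(reward_chosen: float, reward_rejected: float) -> float:
    """Bradley-Terry probability that the 'chosen' response is preferred.

    Equals sigmoid(reward_chosen - reward_rejected), computed in a
    numerically stable way for large positive or negative gaps.
    """
    gap = reward_chosen - reward_rejected
    if gap >= 0:
        return 1.0 / (1.0 + math.exp(-gap))
    e = math.exp(gap)
    return e / (1.0 + e)


def bt_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Negative log-likelihood of one observed preference under Bradley-Terry.

    Equals log(1 + exp(-gap)), written so exp() never overflows.
    """
    gap = reward_chosen - reward_rejected
    return max(-gap, 0.0) + math.log1p(math.exp(-abs(gap)))


if __name__ == "__main__":
    # Hypothetical reward scores for a preferred and a rejected response.
    print(bt_preference_prob(1.5, 0.5))
    print(bt_loss(1.5, 0.5))
```

Under this model the preference probability depends only on the reward gap, which is the kind of rigid assumption that robustness-oriented methods aim to relax.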

— via World Pulse Now AI Editorial System