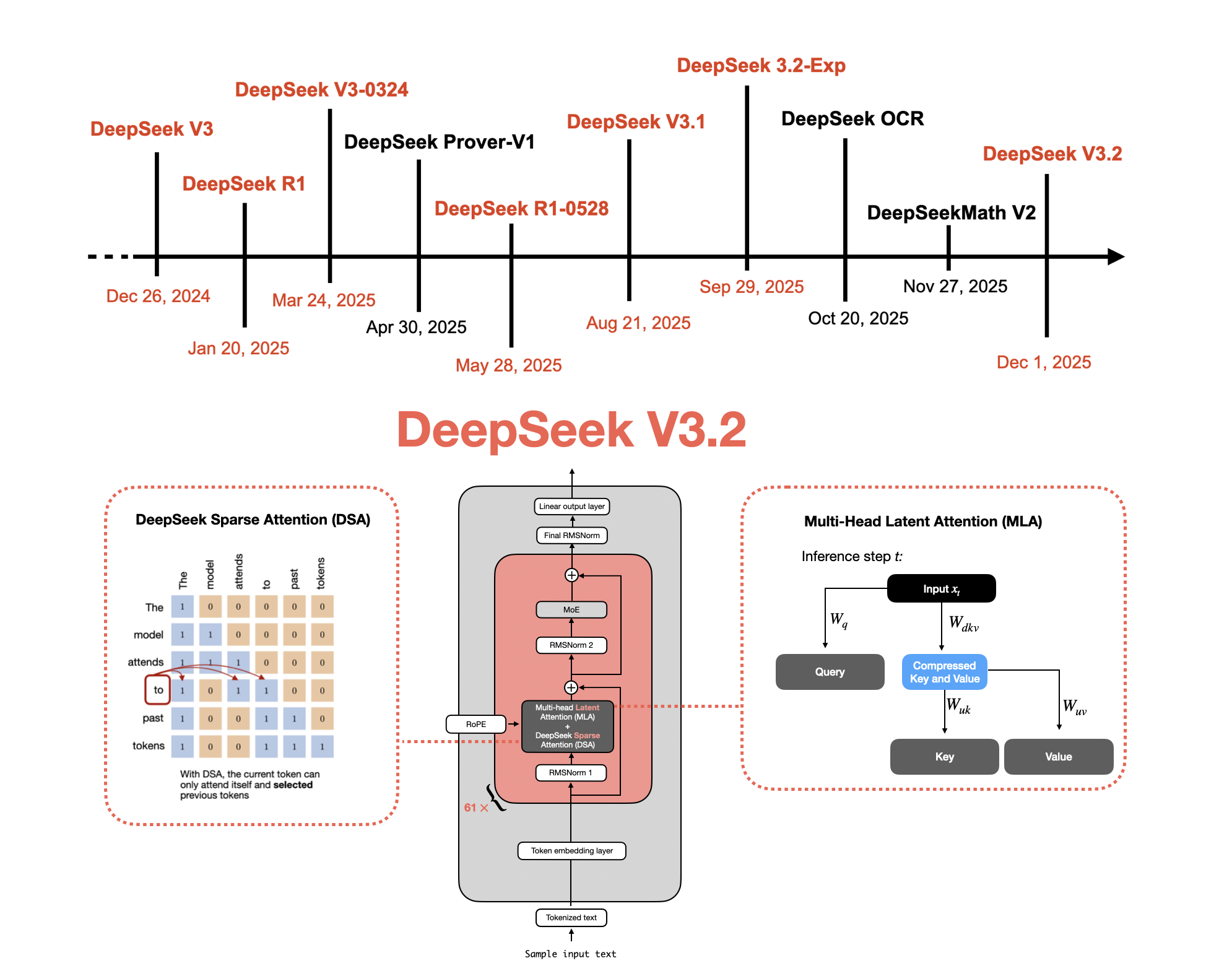

A Technical Tour of the DeepSeek Models from V3 to V3.2


- DeepSeek's flagship open-weight models have evolved from V3 to V3.2. This technical tour walks through the enhancements made across those releases, which are aimed at keeping the models competitive with other leading AI systems.

- The V3.2 release matters for DeepSeek because it positions the company to challenge established players such as Google and OpenAI.

- The progression from V3 to V3.2 reflects a broader industry trend: companies are racing to build systems capable of complex reasoning and problem-solving amid increasing competition.

— via World Pulse Now AI Editorial System