Meta's SAM 3 segmentation model blurs the boundary between language and vision


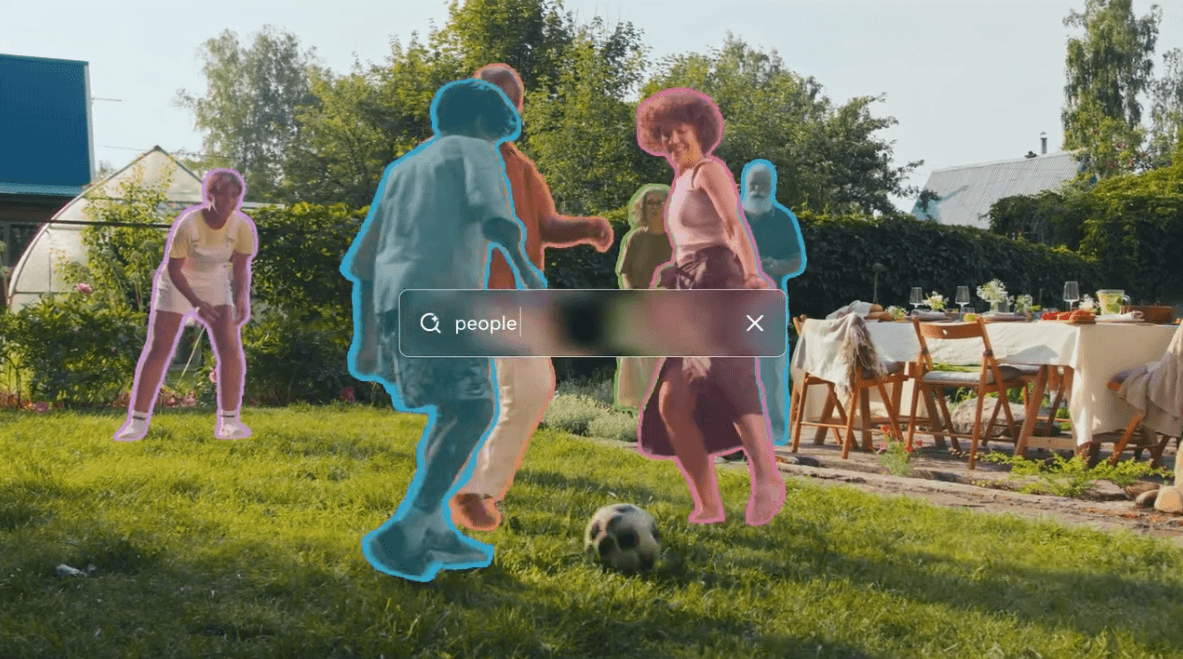

- Meta has unveiled the third generation of its Segment Anything Model (SAM 3), which accepts open-vocabulary prompts. As a result, it can segment objects in images and videos without being limited to a fixed set of predefined categories. It also departs from traditional segmentation models in how it was trained, using a novel approach that combines human and AI annotators.

- SAM 3 is significant for Meta because it makes segmentation more flexible and nuanced: users can describe what they want segmented instead of choosing from a fixed label list. This could strengthen Meta's position in the competitive AI landscape, particularly in applications that require sophisticated image and video analysis.

- SAM 3 fits a broader industry trend toward models that blur the lines between modalities such as language and vision. Other recent releases, including Google's Nano Banana Pro and various self-supervised learning models, reflect the same shift toward more integrated and versatile AI systems.

— via World Pulse Now AI Editorial System