Anthropic Has a Plan to Keep Its AI From Building a Nuclear Weapon. Will It Work?

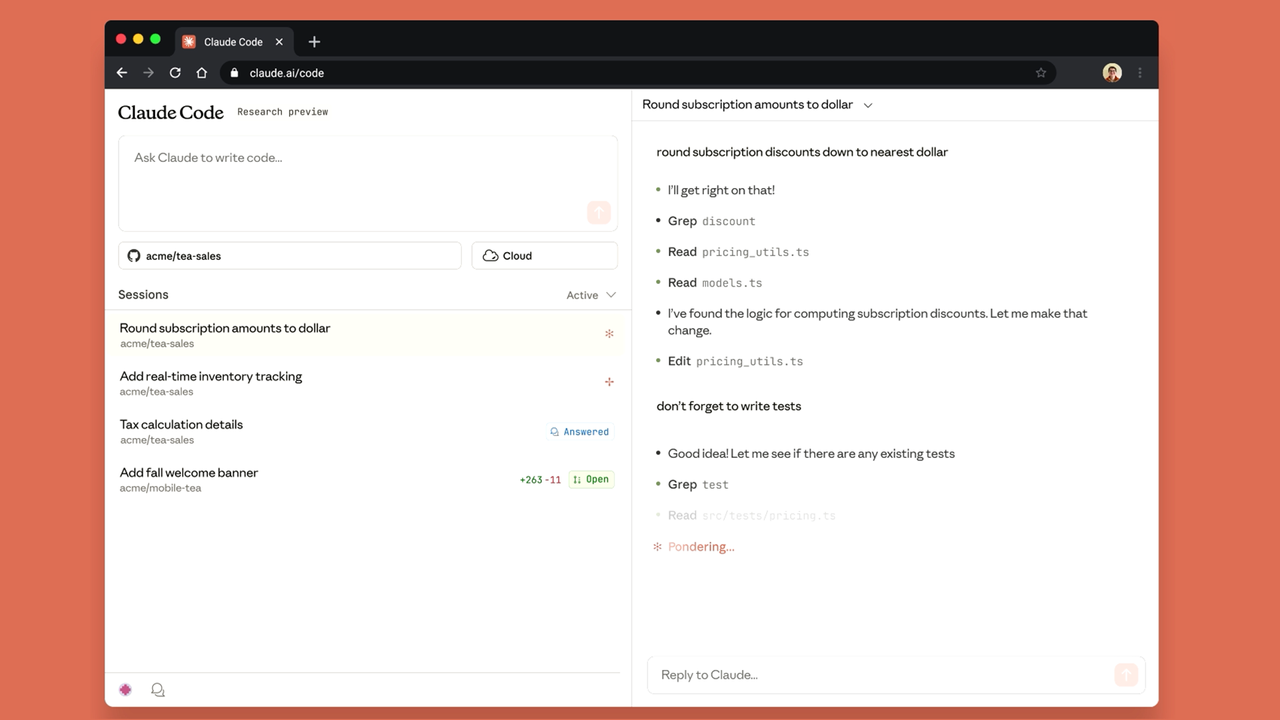

Anthropic has teamed up with the US government to implement a filter designed to prevent its AI, Claude, from assisting in the construction of nuclear weapons. The initiative raises questions about AI safety and about how effective such a filter can be. Some experts see it as a crucial step toward responsible AI use; others doubt that it will provide the protection it is meant to deliver. The outcome of the collaboration could shape future approaches to AI governance and security.

— Curated by the World Pulse Now AI Editorial System