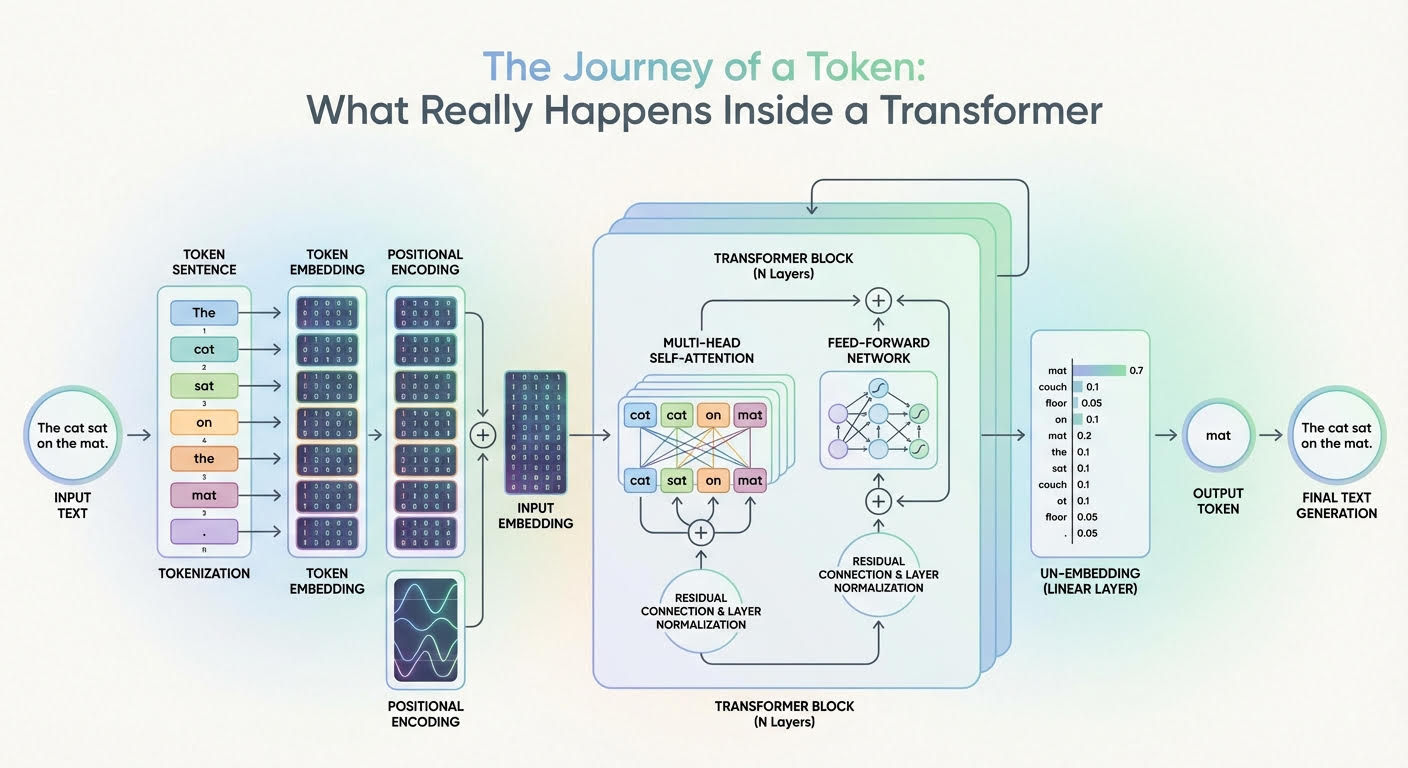

The Journey of a Token: What Really Happens Inside a Transformer

Neutral | Artificial Intelligence

- Large language models (LLMs) are built on the transformer architecture, a deep neural network that processes input as a sequence of token embeddings. This architecture is what lets LLMs interpret and generate human-like text, making it a cornerstone of modern AI applications.

- Advances in transformer architectures have improved LLM performance on natural language processing tasks. Organizations that adopt LLMs may benefit from more effective communication tools and applications.

- Work on transformer architectures is part of a broader trend in AI research, which is also investigating areas such as quantum computing and novel regularization techniques to optimize model performance. These efforts aim to refine LLMs and address challenges like over-refusal in output generation, so that AI systems remain safe and effective.
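
To make the phrase "sequences of token embeddings" concrete, here is a minimal sketch in plain Python of how tokens might be turned into vectors and then mixed by a single self-attention step. The vocabulary, the embedding values, and the function names (`embed`, `softmax`, `self_attention`) are all hypothetical and chosen for illustration. A real transformer learns its embeddings, applies learned query, key, and value projections, and stacks many such layers.

```python
import math

# Hypothetical toy vocabulary and 4-dimensional embedding table.
# Real models learn these values; one-hot rows are used here for readability.
VOCAB = {"the": 0, "cat": 1, "sat": 2}
EMBEDDINGS = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]


def embed(tokens):
    """Map each token string to its embedding vector."""
    return [EMBEDDINGS[VOCAB[t]] for t in tokens]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Single-head scaled dot-product self-attention.

    Assumption: identity projections (Q = K = V = x), which a real
    transformer would replace with learned weight matrices.
    """
    d = len(x[0])
    out = []
    for q in x:
        # Similarity of this token's query to every token's key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)])
    return out


tokens = ["the", "cat", "sat"]
x = embed(tokens)       # one vector per token
y = self_attention(x)   # each output vector blends information from all tokens
```

Each row of `y` is a weighted blend of every token's embedding, with the largest weight on the token's own position in this toy setup. That mixing of context into each position is the core operation the summary above refers to.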

— via World Pulse Now AI Editorial System