Last Week in AI #328 - DeepSeek 3.2, Mistral 3, Trainium3, Runway Gen-4.5


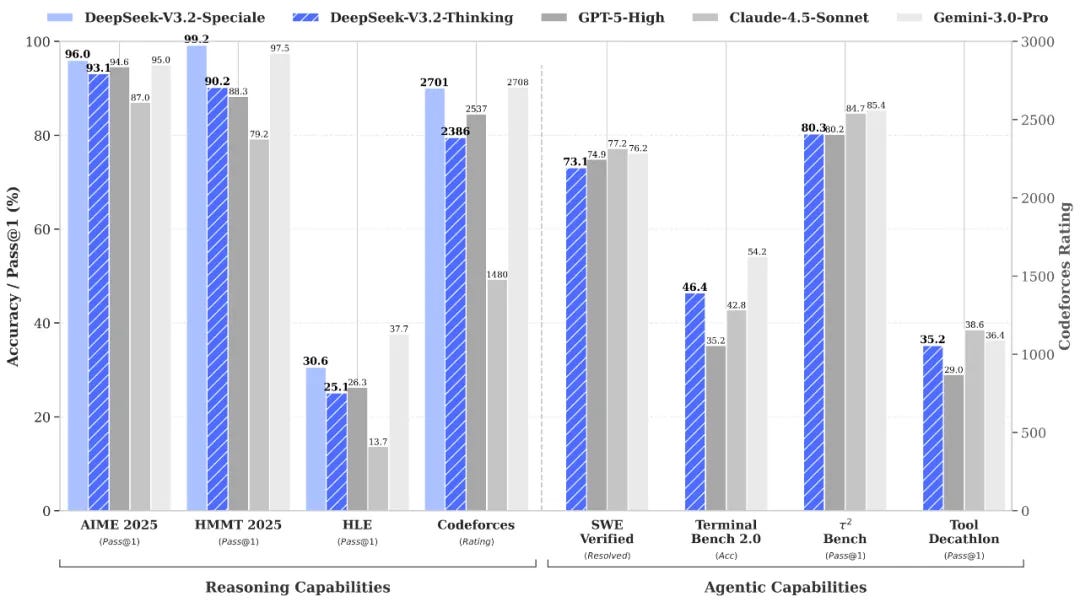

- DeepSeek has released new reasoning models, moving its V3 line to V3.2. Meanwhile, Mistral has launched Mistral 3, a family of open-source models designed to run on a range of platforms. Together, the releases show how competitive the AI sector has become, with companies racing to expand what their models can do.

- With the new models, DeepSeek aims to compete directly with industry giants such as Google and OpenAI on complex reasoning. Mistral 3 comprises ten models released under the Apache 2.0 license and is aimed at applications across a wide range of devices.

- Both releases reflect a broader industry trend toward open-source models and smaller, more efficient models. The shift signals a growing recognition that smaller models can be more efficient than larger ones, as companies pursue distributed intelligence and try to make AI more accessible.

— via World Pulse Now AI Editorial System